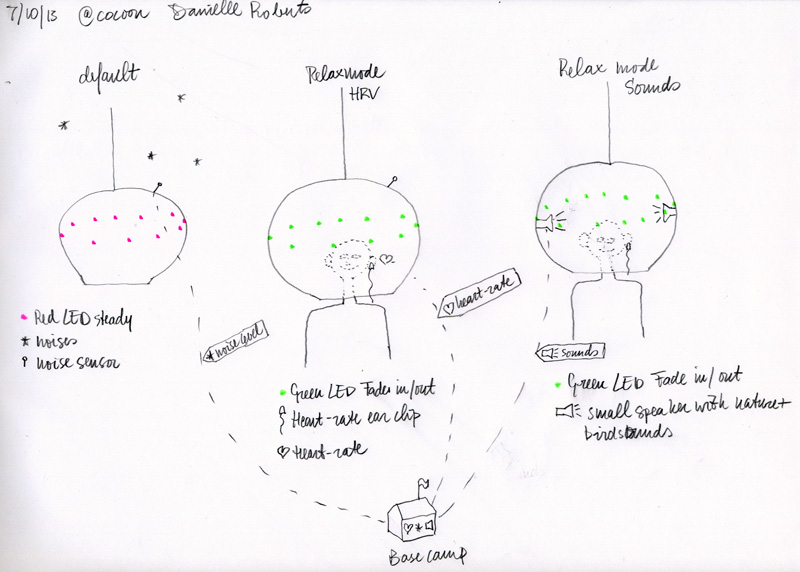

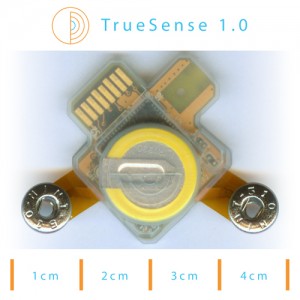

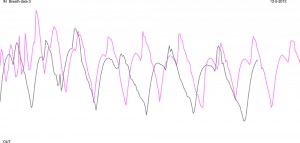

On 23 and 24 October I took part in an isolation mission inside a spaceship simulation. This mission is part of the social design project Seeker by Belgian artist Angelo Vermeulen. A group of architecture master's students from the TU/e worked on an exploration of living in space and built an experimental spaceship. The aim is to research how ecology, sociology and technology can merge and provide integrated living environments for the future. I’ve built a relaxation device for the astronauts.

Part of this project is an isolation mission where a crew enters the spaceship and is locked in for two days. During that time they are completely self-sufficient and no input from outside is allowed. I was one of the four crew members. My main motivation for joining was to learn more about self-sufficient living and to test my relaxation device.

Being locked in with three other people I hardly knew seemed quite daunting to me, as I’m kind of a hermit and have experience with living alone for a month. The space was quite small. The public could look inside and there was no privacy whatsoever.

The first thing that became clear when we entered was that there was so much to do. This was going to be our home for the next few days so we had to make it tidy and cosy. But we started out with an introduction round, as we didn’t know each other. Amazingly enough that turned into a good conversation. It appeared we were all interested in spirituality and that became the theme for the mission. After the talk it seemed like we’d known each other for a long time. Angelo said that his team at the HI SEAS mission also clicked immediately. But maybe it is also the setting, being dependent on each other, that creates a bond? Or maybe an experiment like this attracts like-minded people?

We were told to bring books and magazines but it turned out we had absolutely no time for reading. From the first moment it was as if I’d entered a pressure cooker. All my senses were sharp and my brain was going full speed. Living in such an environment is difficult. Everything is cramped and living has a camping feel to it. Tasks take an awful lot of time to complete (cooking took 2 to 2.5 hours).

The whole experience took me back to my childhood. I used to like to make things but we didn’t have many proper tools at home. So I just made do with what was there. It makes one very inventive. I suppose that is where my artistic roots lie. I really loved the feeling of being so challenged. And I love the solutions we all came up with.

The collaborative part was really inspiring. I loved the merging of the relaxation device with the workout / energy generation. The students came up with the idea of making the water usage visible using transparent water bottles. I extended that by making the waste bins transparent. It is such an easy way to become aware of your behaviour.

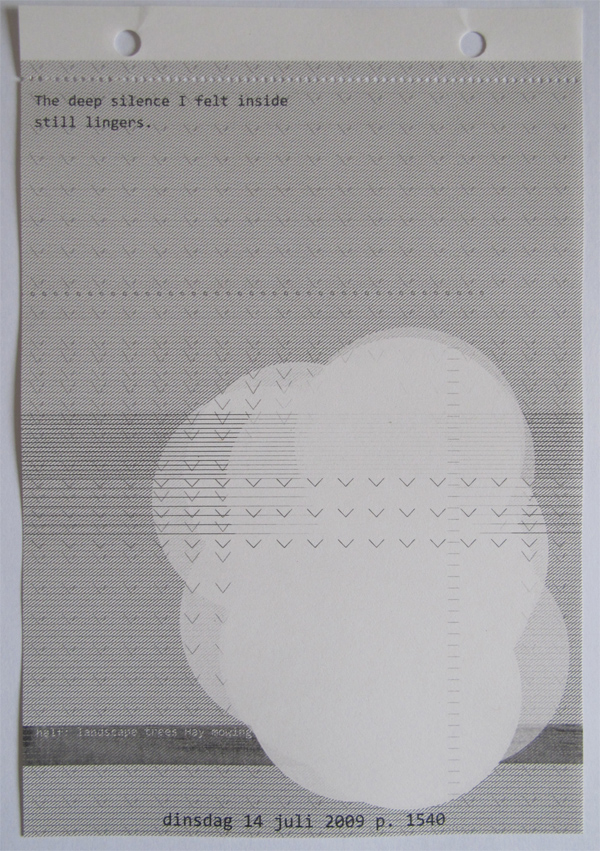

The meals were great; we even ate an original HI SEAS recipe. We also got some lovely bread from the bakery next door. And Angelo’s nano wine was a real mystery. (We had to put the wine in the microwave to get different tastes, and it really worked!) I suppose I loved our conversations the best. From those we decided I’d give a little introduction to Zen meditation. So we enjoyed a couple of minutes of silence between us. With people looking through the window, that was very special.

For me personally it was good to see that I have qualities as a hermit as well as being a team player. Working, thinking and talking together really made this a rich experience. But I do see now why in monasteries they have a strict timetable. That makes it a lot easier to get things done. For me that would be the next step to explore and experiment with.