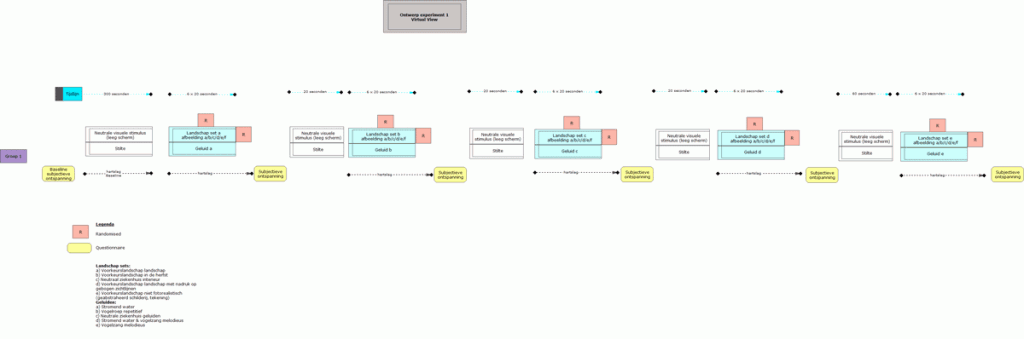

In this post I want to give an overview of the results of the first experiment, and I will spare you the heavy statistics speak, so don’t expect a scientific article. The data is there, and I may write a proper article one day, but that isn’t appropriate for this blog.

Together with Hein from the Open University I looked at the data from the first experiment. This was an exploratory experiment, so we’re looking for trends and directions to take with us to the next step.

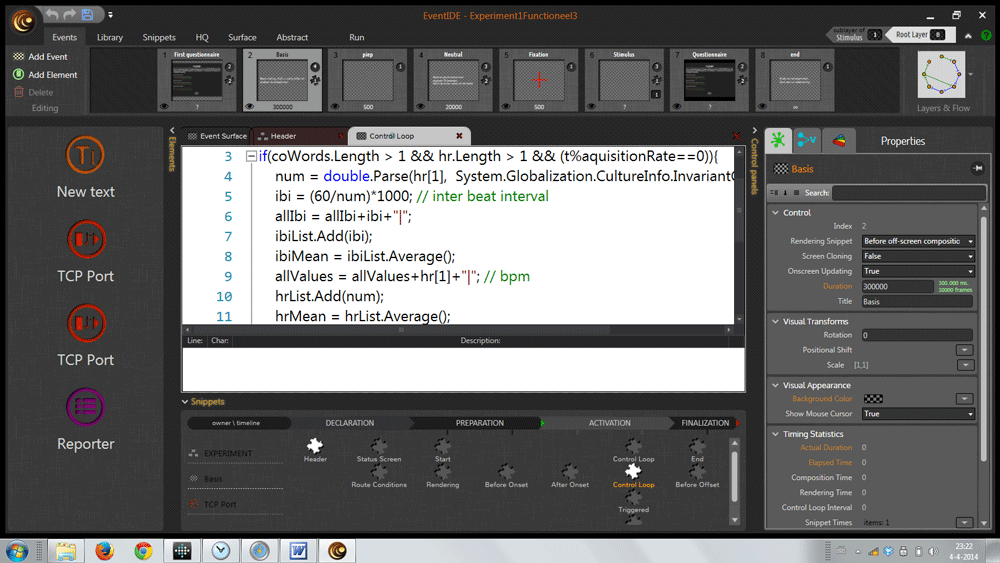

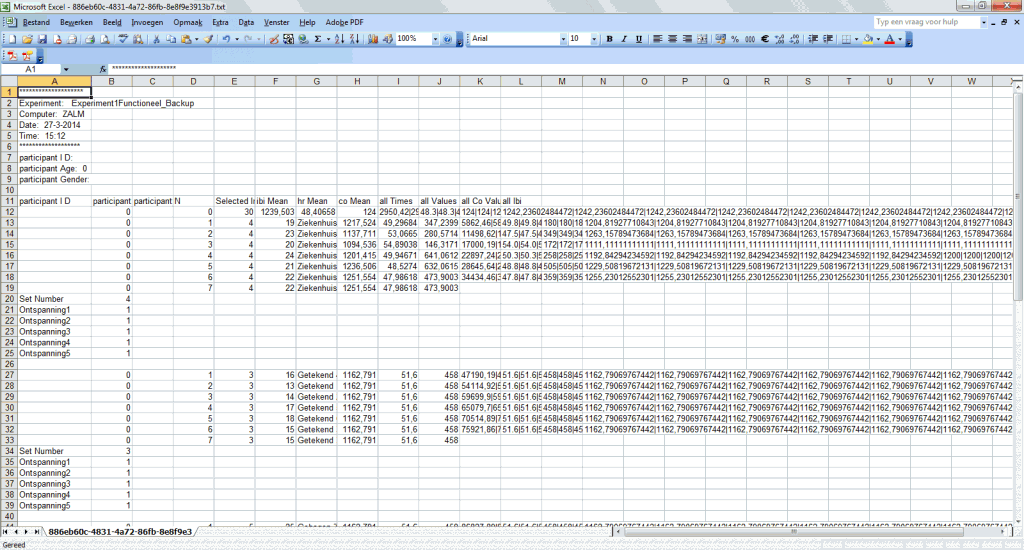

The students did a splendid job organizing the dataset. For each participant there was basic demographic data (gender and age), plus means and combined means for the perceived-relaxation questions, for the separate images and for the images combined in sets. For each set there are means for beats per minute (BPM), inter-beat interval (IBI) and heart coherence.

To check that our self-constructed questionnaire was reliable, I ran a scale reliability test. The questionnaires for all five sets showed good reliability. This only means that the questions are internally consistent; the questionnaire itself isn’t validated for measuring relaxation. We simply asked the three questions.
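The post doesn’t say which reliability statistic was used; a common choice for this kind of internal-consistency check is Cronbach’s alpha, which compares the variance of the individual items with the variance of the summed score. Below is a minimal sketch, assuming Cronbach’s alpha and three items per respondent. The function name `cronbach_alpha` and the ratings are hypothetical, not our data.

```python
from statistics import variance

def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    k = len(responses[0])                       # number of items (here: 3 questions)
    items = list(zip(*responses))               # regroup the scores per item
    item_var_sum = sum(variance(item) for item in items)
    totals = [sum(r) for r in responses]        # each respondent's summed score
    return k / (k - 1) * (1 - item_var_sum / variance(totals))

# Hypothetical 1-10 ratings for "at ease", "relaxed", "joyful and happy"
ratings = [
    [7, 8, 6],
    [5, 5, 4],
    [9, 8, 8],
    [6, 7, 6],
    [4, 3, 4],
]
print(round(cronbach_alpha(ratings), 2))        # → 0.96
```

As a rule of thumb, values of roughly 0.7 or higher are often read as acceptable internal consistency.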

We ran four analyses, one for each of the four variables: perceived relaxation (measured with the questionnaires), BPM, IBI and heart coherence.

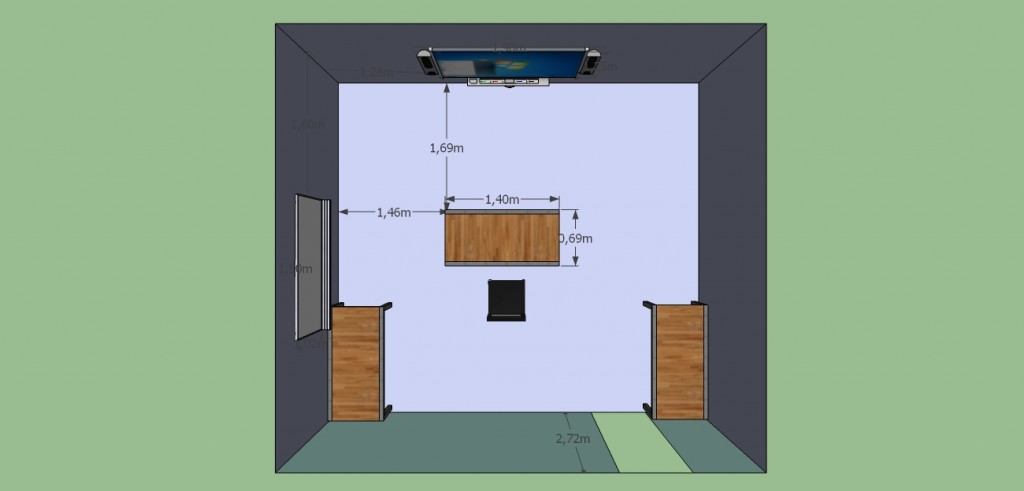

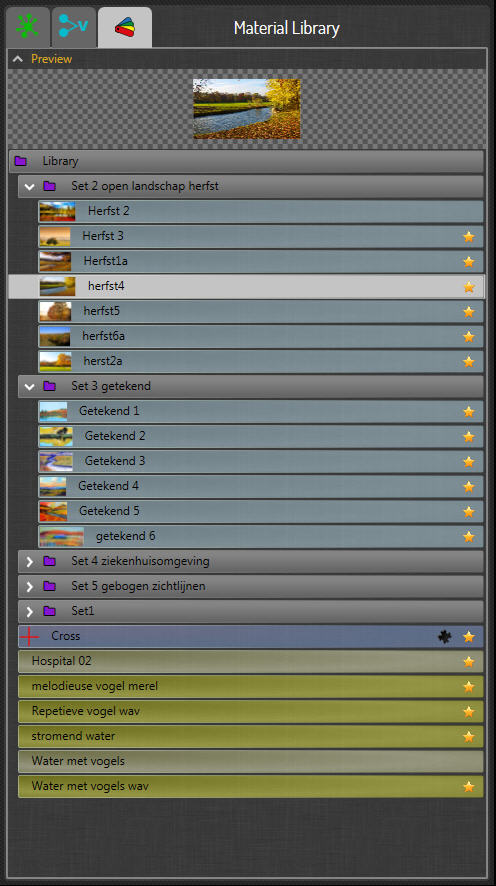

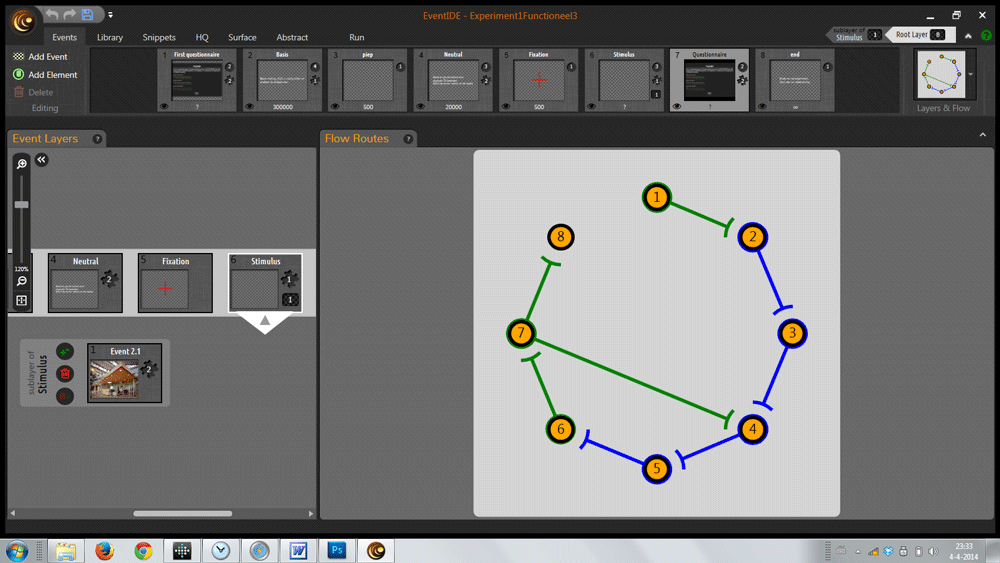

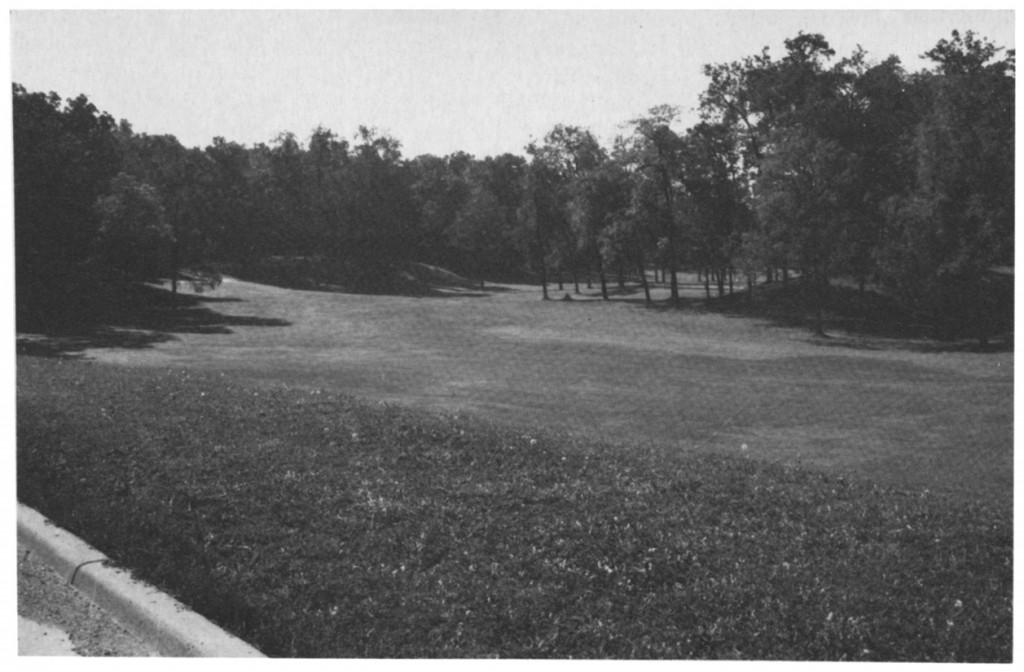

The stimulus sets were (accompanying sound in braces):

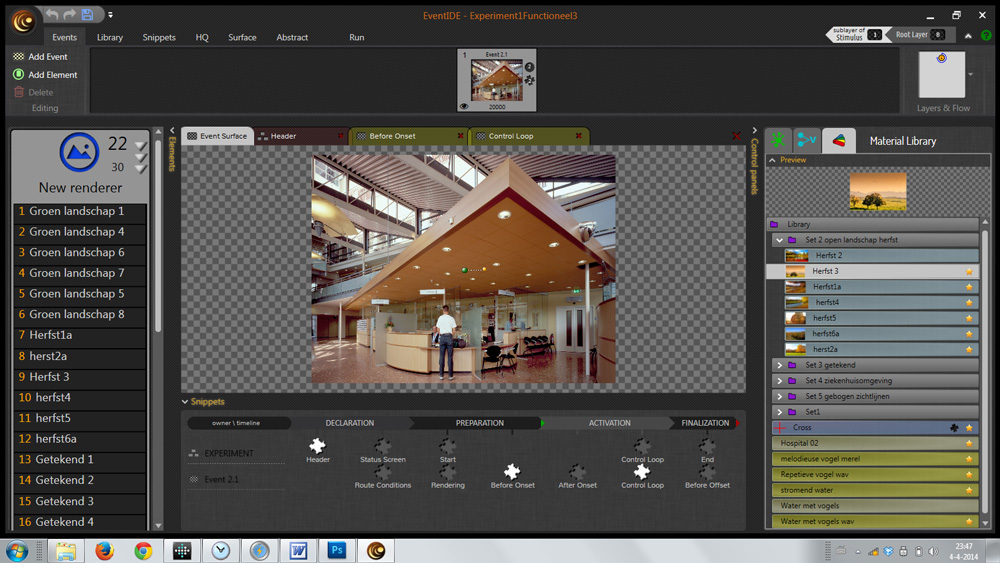

1. Preferred landscape with water element {running water @ 48 dB}

2. Preferred landscape in autumn {repetitive bird calls @ 47 dB}

3. Preferred landscape as abstract painting {melodious birdsong @ 56 dB}

4. Neutral hospital interiors {neutral hospital sounds @ 48 dB}

5. Landscape with deflecting views {running water and melodious birdsong @ 43 dB}

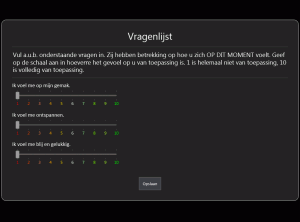

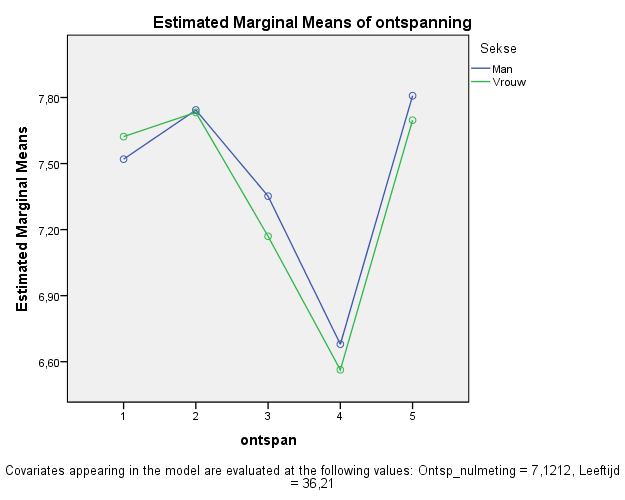

Self-reported relaxation

The three questions we asked after the baseline measurement and after every stimulus set were “I feel at ease”, “I feel relaxed” and “I feel joyful and happy”, each rated on a scale of 1 to 10. The three answers were merged into a single relaxation scale. The hypothesis was that the overall relaxation score would be lower for the hospital interior set (4) than for all of the landscape sets.
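The post only says the three answers were “merged”; a plain average is one simple way to do that. The sketch below assumes that averaging and uses a hypothetical participant’s answers. It computes a relaxation score per set and checks only the direction the hypothesis predicts (set 4 below every landscape set). It is a descriptive check, not the significance test we ran, and the function names are illustrative.

```python
from statistics import mean

HOSPITAL_SET = 4                  # set 4: neutral hospital interiors
LANDSCAPE_SETS = (1, 2, 3, 5)     # the four landscape sets

def relaxation_score(at_ease, relaxed, joyful):
    """Merge the three 1-10 ratings into one score (assumption: a plain mean)."""
    return mean([at_ease, relaxed, joyful])

def hospital_lowest(scores_by_set):
    """True if the hospital set scores below every landscape set."""
    return all(scores_by_set[HOSPITAL_SET] < scores_by_set[s] for s in LANDSCAPE_SETS)

# Hypothetical answers per set: (at ease, relaxed, joyful and happy)
answers = {1: (8, 8, 7), 2: (7, 8, 7), 3: (6, 7, 6), 4: (5, 5, 4), 5: (8, 7, 7)}
scores = {s: relaxation_score(*a) for s, a in answers.items()}
print(hospital_lowest(scores))
```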

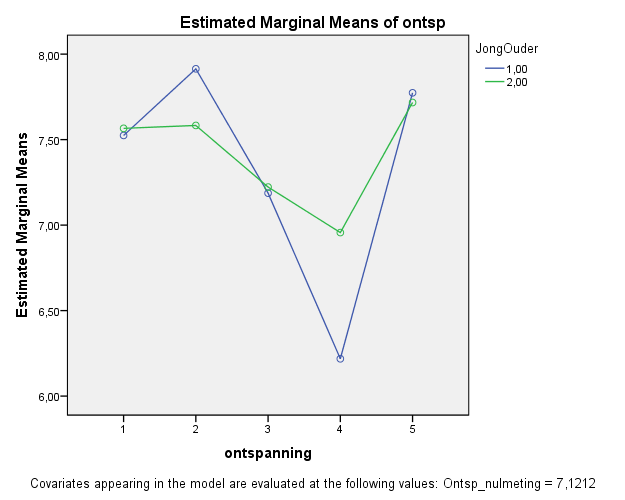

There was a significant effect on relaxation. As the graph shows, set 4 (hospital interiors) produced a distinct drop in the sense of relaxation. Although the abstract paintings also score lower, the overall trend is mainly driven by the dip in relaxation scores on the hospital set. This confirms our hypothesis.

There also appeared to be an interaction between age and relaxation. To gain more insight into this age effect I looked at the data and noticed two clear groups: participants aged 25 and younger, and participants older than 39. The groups are about the same size (15 young, 18 older); there were no participants aged between 25 and 39. I then tested the relaxation effect separately for the two groups: it was not significant for the younger participants, but it was for the older participants.
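The age split can be written down as a small rule. A sketch with hypothetical ages (not the real participant list); the function name `age_group` is illustrative, and the cut-offs follow the two groups described above.

```python
from collections import Counter

def age_group(age):
    """Assign a participant to one of the two age groups seen in the data."""
    if age <= 25:
        return "young"        # 25 years old and younger
    if age > 39:
        return "older"        # above 39 years
    # No participant fell between the groups, so flag any such age explicitly
    raise ValueError(f"age {age} falls between the two groups (26-39)")

# Hypothetical ages
ages = [21, 23, 24, 25, 44, 52, 58, 61]
print(Counter(age_group(a) for a in ages))
```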

Heart-rate

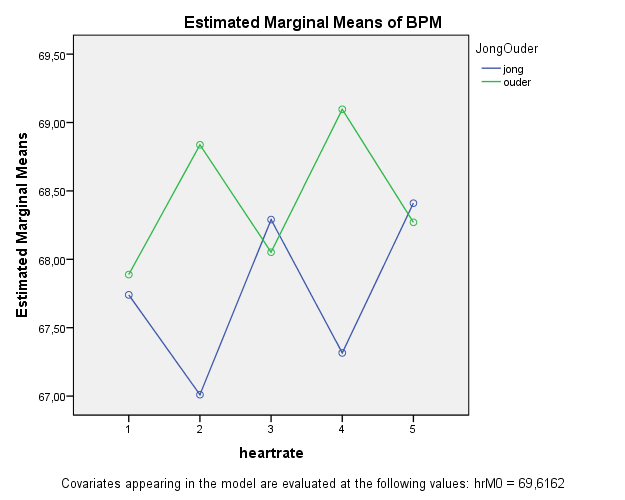

For heart rate we used two measures derived from the same data: beats per minute (BPM) and inter-beat interval (IBI). Because one is essentially the inverse of the other, it makes little difference which analysis I discuss here. The hypothesis was that the BPM would be higher for the hospital interior set (4) than for all of the landscape sets.
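BPM and IBI describe the same beats, so converting between them is a single division: at 75 BPM the heart beats every 60,000 / 75 = 800 ms. A minimal sketch; the function names and sample intervals are hypothetical. It also shows one small caveat: the conversion is non-linear, so converting each beat and then averaging gives a slightly different number than averaging first and converting afterwards.

```python
from statistics import mean

def bpm_to_ibi_ms(bpm):
    """Inter-beat interval in milliseconds for a heart rate in beats per minute."""
    return 60_000 / bpm

def ibi_ms_to_bpm(ibi_ms):
    """Heart rate in beats per minute for an inter-beat interval in milliseconds."""
    return 60_000 / ibi_ms

print(bpm_to_ibi_ms(75))  # → 800.0

# Hypothetical beat-to-beat intervals (ms) during one stimulus set
ibis = [800, 750, 857, 923]
mean_bpm_from_beats = mean(ibi_ms_to_bpm(i) for i in ibis)   # convert, then average
bpm_from_mean_ibi = ibi_ms_to_bpm(mean(ibis))                # average, then convert
print(round(mean_bpm_from_beats, 1), round(bpm_from_mean_ibi, 1))
```

The two numbers are close but not identical, so the BPM and IBI analyses point the same way without being exact mirror images.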

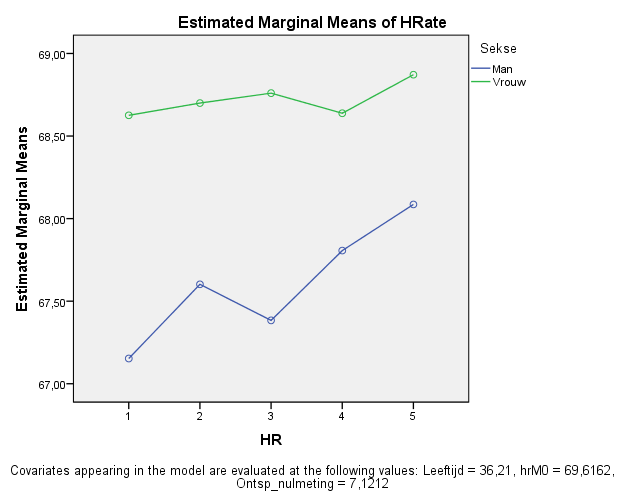

There were no significant differences between the sets, so our hypothesis is not supported.

Again, though, age seems to play a role, this time in relation to heart rate. The graph below shows that the heart-rate responses of the older and younger participants diverge for set 2 and set 4: the two age groups react quite differently to these stimuli.

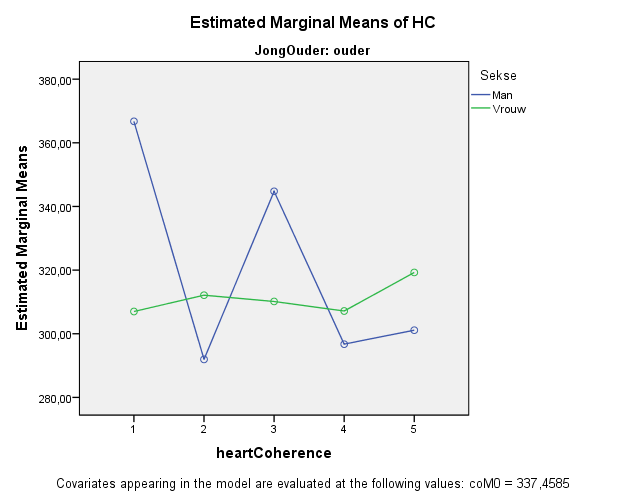

Heart coherence

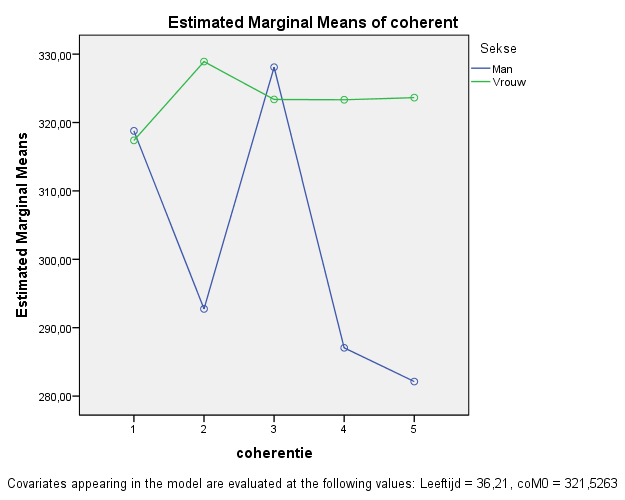

The hypothesis for heart coherence was that the coherence level would be lower for the hospital interior set (4) than for all of the landscape sets.

There is a significant trend for the gender-coherence interaction. The graph shows that the women’s coherence is almost the same across the five sets but higher than their baseline measurement. The men show a much more varied response and, on average, a considerably lower coherence than at baseline. Interestingly, the abstract painting set (3) has a very high score for the men.

Looking deeper into this trend, age again comes into play. For the younger participants there was no significant difference in heart coherence between men and women, whereas the older participants show a significant difference between the sexes. The older men drive the interaction effect between gender and heart coherence.

So although the average heart coherence for the hospital interior set (4) is at the lower end for both men and women, the effect isn’t convincing compared with the scores on the other sets. The results don’t support the hypothesis.

Conclusions

For an exploratory first experiment the analysis has yielded some interesting results. The main hypothesis, that self-reported relaxation and heart coherence would be lower, and BPM higher, for the hospital interior set (4) than for all of the landscape sets, is partly supported.

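Self-reported relaxation and heart coherence showed significant results, although for heart coherence the significant effect was the interaction with gender rather than a dip for the hospital set.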

The lack of significance for heart rate may be due to the small sample, or it may suggest that the differences between the sets weren’t big enough. To address this, I want to reduce the number of sets in the next experiment and introduce a stress stimulus to create more contrast between the participants’ states.

Judging from the analyses, it is clear to me that the participants’ ages should be more homogeneous in the next experiment.

For me, the most surprising and promising result was the high heart coherence of the men on the abstract paintings. People were skeptical about using these abstract stimuli, as there is little support in the literature for the idea that non-realistic images have any effect on viewers. Of course this will require more research, but it is an interesting and unexpected result.