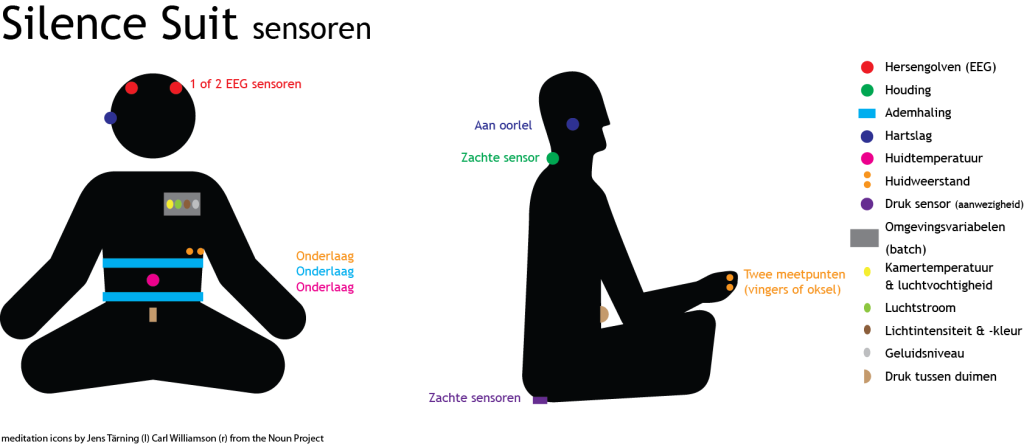

For over a year I’ve been working on a meditation wearable. It measures biometric and environmental input. Its goal is to use these measurements to improve your meditation and to generate artistic visualisations from the data. The wearable is part of a bigger project, Hermitage 3.0, a high-tech living environment for 21st-century hermits (like me). Now that the wearable is taking shape, I’d like to tell a little about the process of creating it.

The sensors

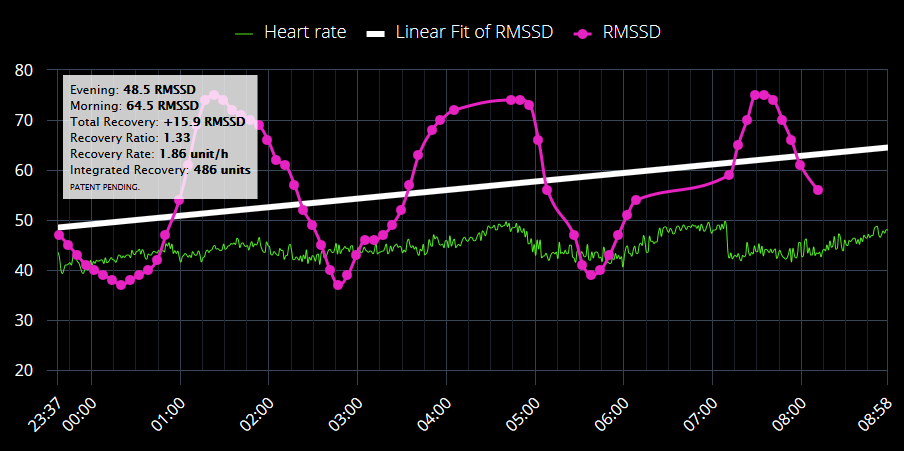

I started with a simple but surprisingly accurate heart-rate sensor that works with the Arduino platform. It uses an ear clip and sends out the inter-beat interval and beats per minute at every beat. With some additional code in Processing I can calculate heart-rate variability. These are already two important measures that can tell a lot about my state while meditating. Then I added galvanic skin response (GSR) to measure the sweatiness of my skin, a nice indicator of stress or excitement. I added an analogue temperature sensor that I put on my skin to measure its temperature. Skin temperature also changes as I relax. I also made a switch sensor that is attached to my meditation stool. Sitting on it marks the start of a session; getting up marks the end.
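The post doesn’t say which heart-rate variability measure the Processing code computes. As an illustration only, here is a minimal Python sketch of two common time-domain measures, RMSSD (root mean square of successive differences) and SDNN (standard deviation of the intervals), computed from the inter-beat intervals in milliseconds that the sensor sends at every beat. The function names and sample values are hypothetical.

```python
import math
from statistics import mean, stdev


def bpm_from_ibi(ibi_ms: float) -> float:
    """Heart rate in beats per minute from one inter-beat interval in ms."""
    return 60000.0 / ibi_ms


def rmssd(ibis_ms: list[float]) -> float:
    """Root mean square of successive differences between inter-beat intervals (ms)."""
    if len(ibis_ms) < 2:
        raise ValueError("need at least two inter-beat intervals")
    diffs = [b - a for a, b in zip(ibis_ms, ibis_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


def sdnn(ibis_ms: list[float]) -> float:
    """Sample standard deviation of the inter-beat intervals (ms)."""
    return stdev(ibis_ms)


if __name__ == "__main__":
    # Hypothetical inter-beat intervals in milliseconds, one per detected beat.
    ibis = [812, 845, 790, 860, 830, 805]
    print(f"mean HR: {bpm_from_ibi(mean(ibis)):.1f} bpm")
    print(f"RMSSD:   {rmssd(ibis):.1f} ms")
    print(f"SDNN:    {sdnn(ibis):.1f} ms")
```

RMSSD reflects beat-to-beat changes and SDNN the overall spread; over a meditation session both could be computed per minute and compared across sessions.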

All sensors were connected to my computer by wires, but the aim was, of course, to make the wearable wireless so I’d be free to move. Even so, I could already see day-to-day changes in my measurements.

A little help from my friends

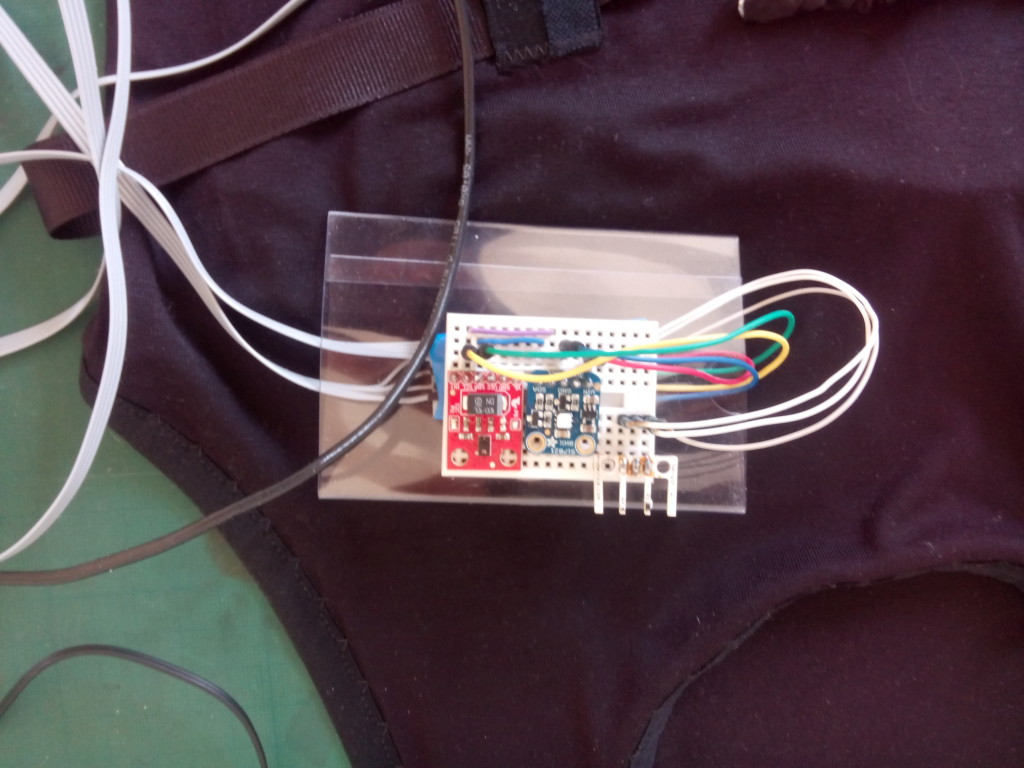

As things became more complex I posted a request for help in a Facebook group. A colleague, Michel, offered to help. We first looked at different ways to connect wirelessly. Bluetooth was a problem because of its short range. XBee also wasn’t ideal because you need a separate connector. We also made a version that wrote to an SD card on the device, but this of course doesn’t offer live data, which was crucial for my plans. We finally settled on Wi-Fi using the SparkFun ESP8266 Thing Dev. We were going to need a lot of analogue pins, which the Thing Dev doesn’t offer (the ESP8266 has only a single analogue input). So we used an MCP3008, an 8-channel analogue-to-digital converter, to supply 8 analogue inputs.
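The MCP3008 talks to the microcontroller over SPI. The project’s ESP8266 firmware isn’t shown in the post, so this is only a Python sketch of the byte framing for a single-ended read as laid out in the MCP3008 datasheet: a start byte, a byte carrying the single-ended flag and channel number, and a padding byte, with the 10-bit result spread over the last two bytes that come back. The helper names and the simulated chip are hypothetical stand-ins for real hardware, and the 3.3 V reference is an assumption.

```python
def mcp3008_request(channel: int) -> list[int]:
    """Build the 3-byte SPI request for a single-ended read of one channel (0-7)."""
    if not 0 <= channel <= 7:
        raise ValueError("MCP3008 has channels 0-7")
    # Byte 0: start bit. Byte 1: SGL/DIFF=1 plus 3-bit channel in the top nibble.
    # Byte 2: don't-care, only clocks out the low 8 bits of the result.
    return [0x01, (0x08 | channel) << 4, 0x00]


def mcp3008_decode(response: list[int]) -> int:
    """Extract the 10-bit conversion result (0-1023) from the 3 bytes clocked back."""
    return ((response[1] & 0x03) << 8) | response[2]


def to_volts(raw: int, vref: float = 3.3) -> float:
    """Convert a 10-bit reading to volts, assuming VREF is tied to 3.3 V."""
    return raw * vref / 1023


def fake_transfer(request: list[int], channel_values: list[int]) -> list[int]:
    """Hypothetical stand-in for an SPI transfer: answers like an MCP3008 would."""
    channel = (request[1] >> 4) & 0x07
    raw = channel_values[channel]
    return [0x00, (raw >> 8) & 0x03, raw & 0xFF]


if __name__ == "__main__":
    # Simulated readings on the 8 channels, e.g. GSR, stretch bands, light.
    chip = [512, 300, 1023, 0, 780, 64, 200, 999]
    for ch in range(8):
        raw = mcp3008_decode(fake_transfer(mcp3008_request(ch), chip))
        print(f"channel {ch}: {raw:4d} -> {to_volts(raw):.2f} V")
```

On real hardware the same three bytes would be sent with Arduino’s `SPI.transfer` on the ESP8266, or with `SpiDev.xfer2` from the `spidev` module on a Linux board; only `fake_transfer` would change.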

More is more

We could then increase the number of sensors. We added an accelerometer for neck position and replaced the analogue skin temperature sensor with a nice and accurate digital one. Around that time a wearable from another project was finished: a vest with resistive rubber bands that measures expansion of the chest and belly region. Using the incoming analogue values I can accurately calculate breath rate and upper and lower respiration. Then it was time to add some environmental sensors, which give more context to, for example, GSR and skin temperature readings. We added room temperature and humidity, light intensity and RGB colour, and air flow.
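The post doesn’t describe how breath rate is derived from the stretch bands. One simple approach, assumed here and not necessarily the one used in the project, is to count inhalation onsets as rises through the signal’s mean, with a small hysteresis band so that noise around the mean isn’t counted as extra breaths, and then turn the spacing between onsets into breaths per minute. The function name, sample rate and hysteresis value are hypothetical.

```python
import math
from statistics import mean


def breath_rate(samples: list[float], sample_rate_hz: float, hysteresis: float = 0.0) -> float:
    """Estimate breaths per minute from one stretch-band channel (chest or belly).

    An inhalation onset is counted each time the signal rises above the band
    [mean - hysteresis, mean + hysteresis] after having been below it.
    The rate is taken over the span between the first and last onset.
    """
    centre = mean(samples)
    upper, lower = centre + hysteresis, centre - hysteresis
    onsets = []    # sample indices where an inhalation starts
    armed = False  # set once the signal has dropped below the band
    for i, value in enumerate(samples):
        if value < lower:
            armed = True
        elif armed and value > upper:
            onsets.append(i)
            armed = False
    if len(onsets) < 2:
        return 0.0  # not enough complete breaths to estimate a rate
    seconds = (onsets[-1] - onsets[0]) / sample_rate_hz
    return (len(onsets) - 1) * 60.0 / seconds


if __name__ == "__main__":
    # Synthetic signal: 60 s of a 0.25 Hz sine (one breath every 4 s) at 10 Hz.
    fs = 10.0
    chest = [math.sin(2 * math.pi * 0.25 * i / fs) for i in range(600)]
    print(f"{breath_rate(chest, fs, hysteresis=0.1):.1f} breaths/min")
```

Running the function separately on the chest and belly channels would give one rate per band. The hysteresis depends on the sensor’s noise level and would need tuning on real recordings.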

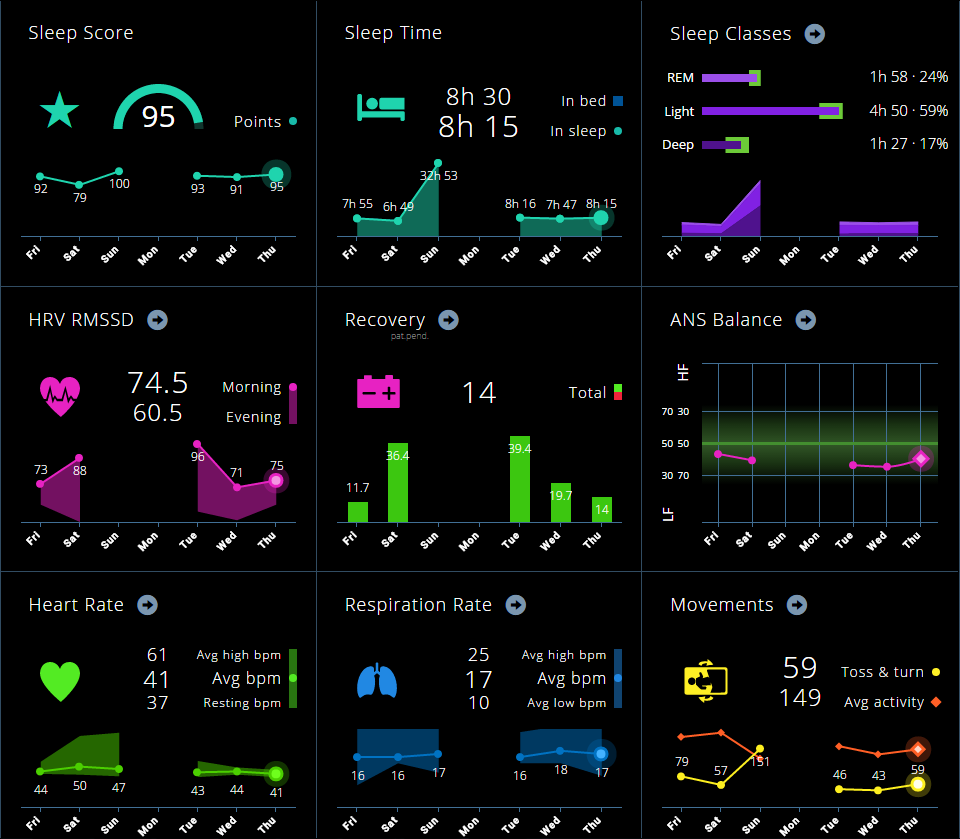

Seeing is believing

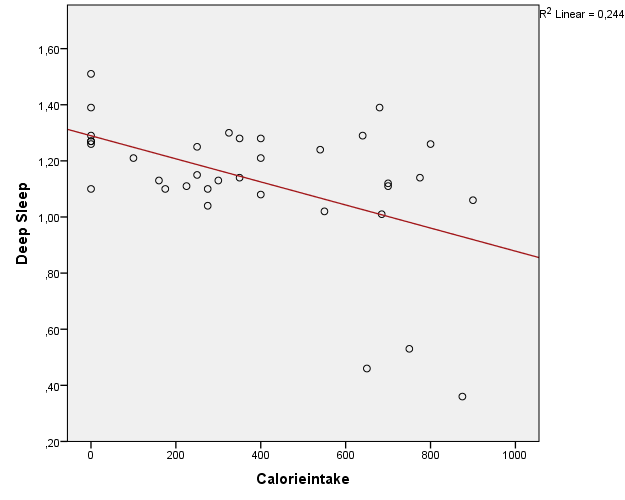

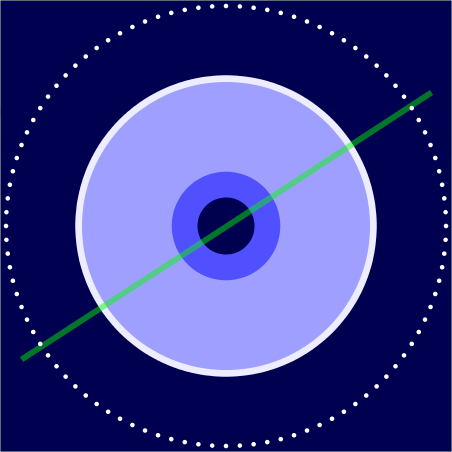

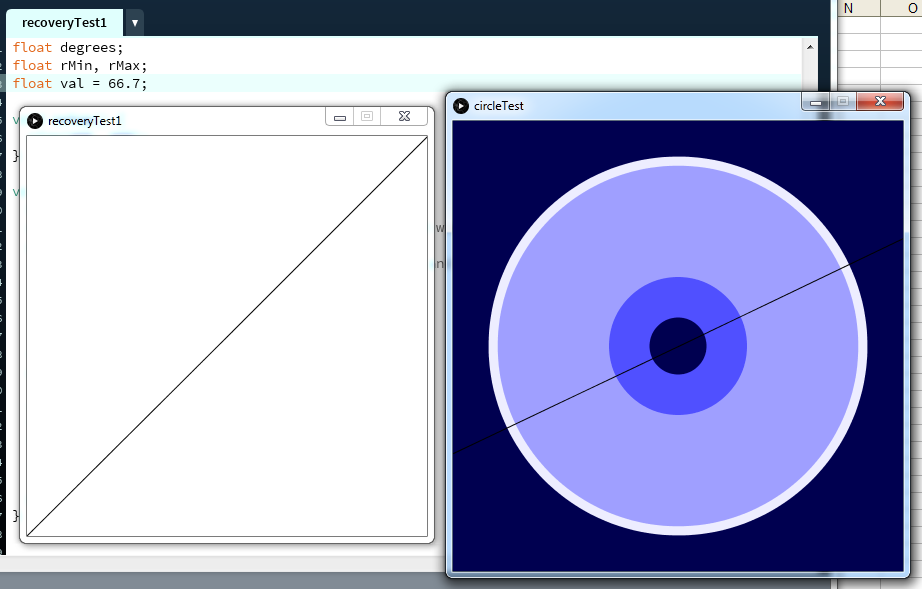

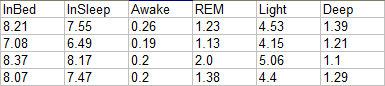

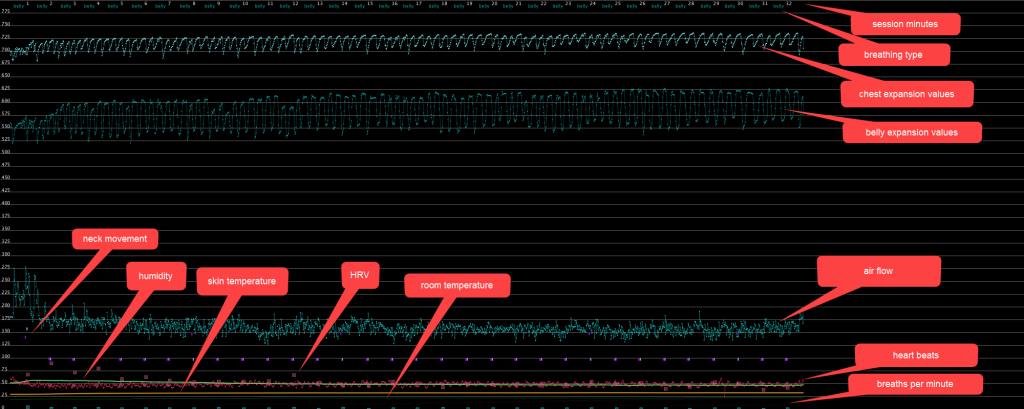

From the start I’ve made simple plots to get a quick insight into the session data. For now they don’t have an artistic purpose but are purely practical. At this point it is still essential to see if all sensors work well together. It’s also nice to get some general insight into how the body behaves during a meditation session.

Data is also stored in a structured text file. It contains minute-by-minute averages as well as means for the whole session.
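The layout of that text file isn’t described, so the following Python sketch assumes a hypothetical format: readings timestamped in seconds since the session started, grouped per minute, averaged per sensor, and written as tab-separated text with a final row of whole-session means. The function names and sensor keys are illustrative only.

```python
from collections import defaultdict
from statistics import mean


def summarise(samples):
    """samples: list of (seconds_since_start, {sensor_name: value}) tuples."""
    per_minute = defaultdict(lambda: defaultdict(list))
    session = defaultdict(list)
    for t, readings in samples:
        minute = int(t // 60)
        for name, value in readings.items():
            per_minute[minute][name].append(value)
            session[name].append(value)
    minute_means = {m: {name: mean(vals) for name, vals in sensors.items()}
                    for m, sensors in sorted(per_minute.items())}
    session_means = {name: mean(vals) for name, vals in session.items()}
    return minute_means, session_means


def to_text(minute_means, session_means):
    """Render the summary as tab-separated lines; empty cell if a sensor had no data."""
    names = sorted(session_means)
    lines = ["minute\t" + "\t".join(names)]
    for minute, values in minute_means.items():
        cells = [f"{values[n]:.2f}" if n in values else "" for n in names]
        lines.append(f"{minute}\t" + "\t".join(cells))
    lines.append("session\t" + "\t".join(f"{session_means[n]:.2f}" for n in names))
    return "\n".join(lines)


if __name__ == "__main__":
    data = [(0, {"hr": 62, "gsr": 3.1}), (30, {"hr": 60, "gsr": 3.0}),
            (75, {"hr": 58, "gsr": 2.8})]
    print(to_text(*summarise(data)))
```

Session means here are taken over all raw samples rather than over the minute averages, so minutes with more samples weigh more.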

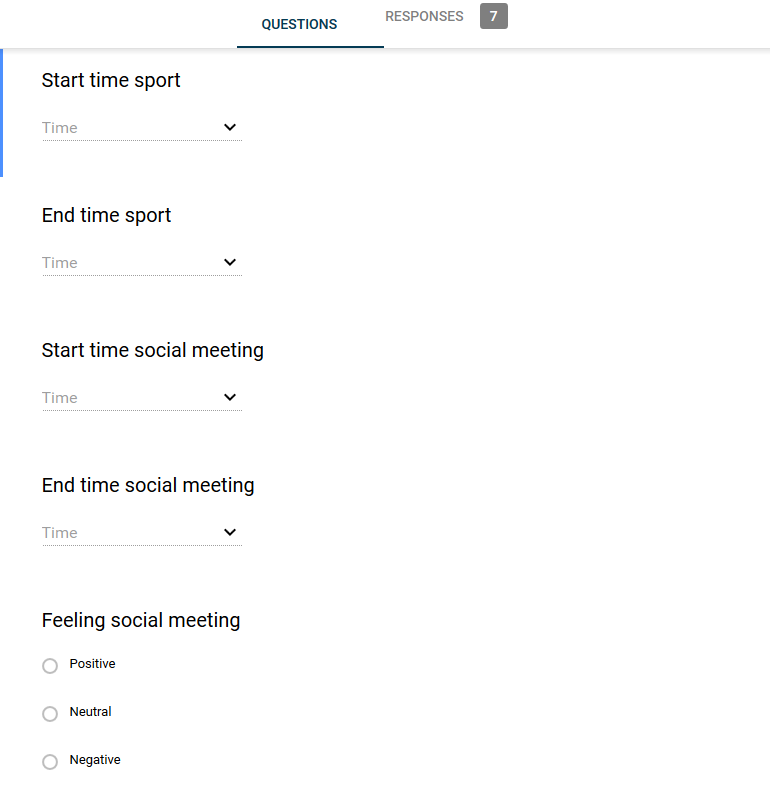

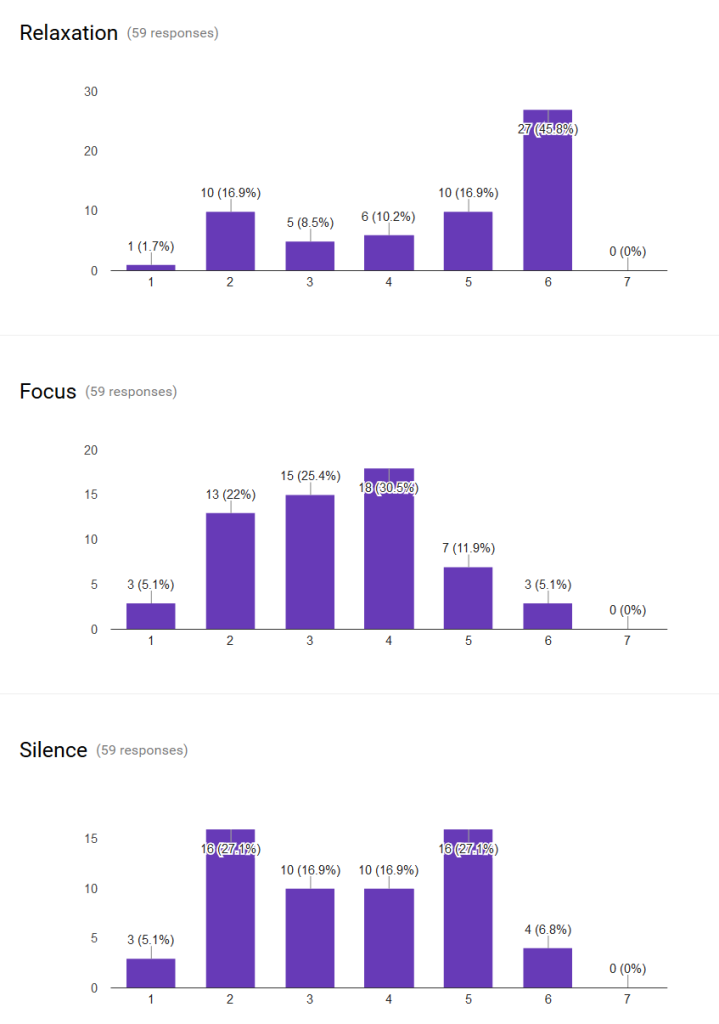

I’ve also made a Google form to track my subjective experience of each session. I rate my focus, relaxation and perceived silence on a 7-point Likert scale, and there is a text field for a remark about the session.

Suit

At first I attached the sensors to the vest from the other project. But last week costume designer Léanne van Deurzen made a first sample of the wearable. It was quite a puzzle for her and her interns to figure out the wiring and positioning of every sensor. I really like the look of this first design. It fits the target group, high-tech hermits, and it is also very comfortable to wear.

The future

The next step will be adding sensors for measuring hand position and pressure, and a sound-level sensor.

Then we will have to make the processing board a bit smaller so it can fit in the suit. We can then start integrating the wiring into the suit and replacing the wires with even more flexible ones.

When all the sensors are integrated I can really start looking at the data and searching for interesting ways to explore and understand it.

I’m also looking for ways to fund the making of 15 suits. That way I can start experiments with groups and find ways to optimise meditation by changing the environment.

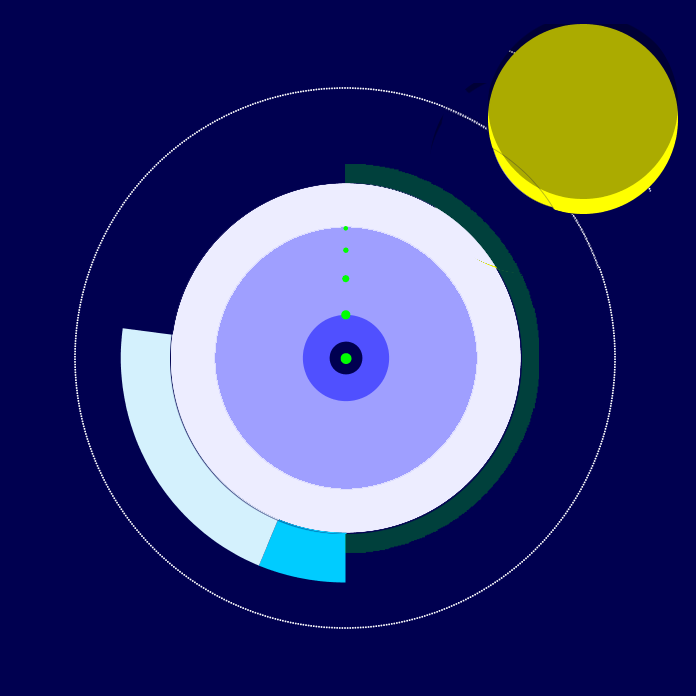

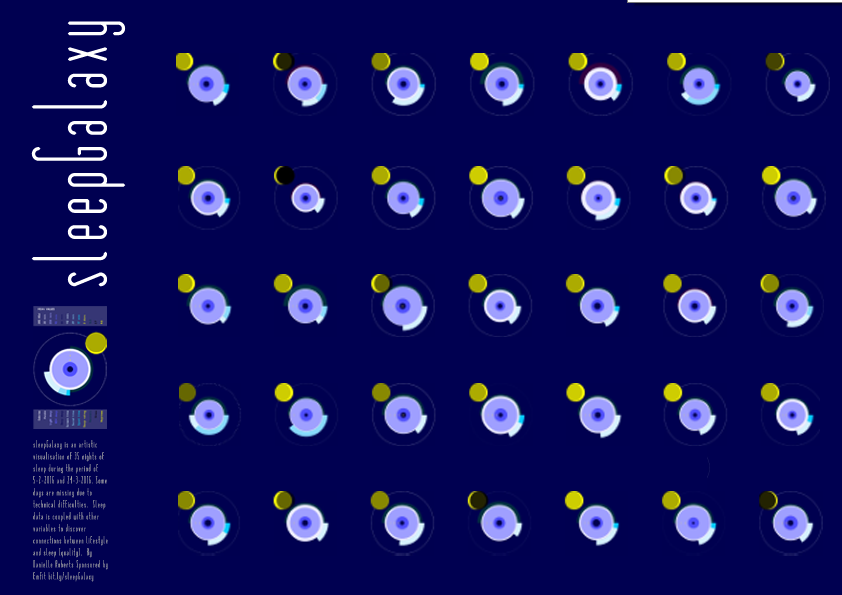

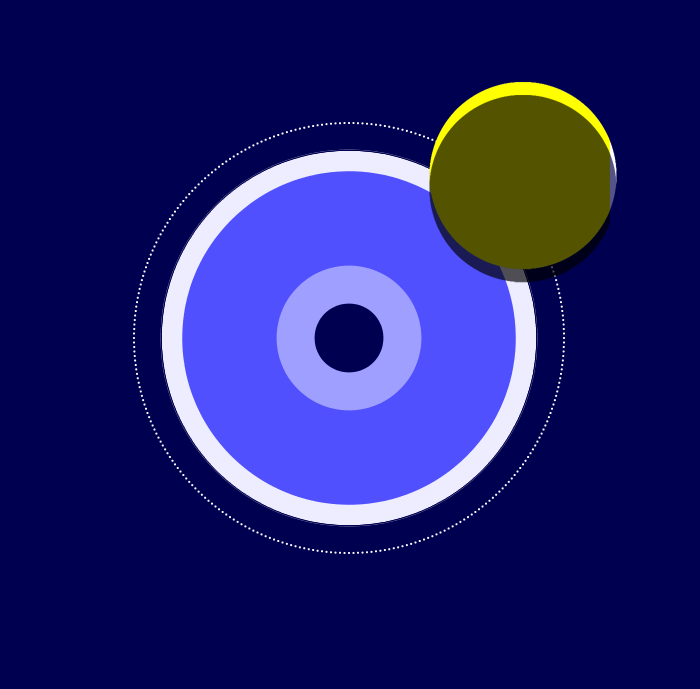

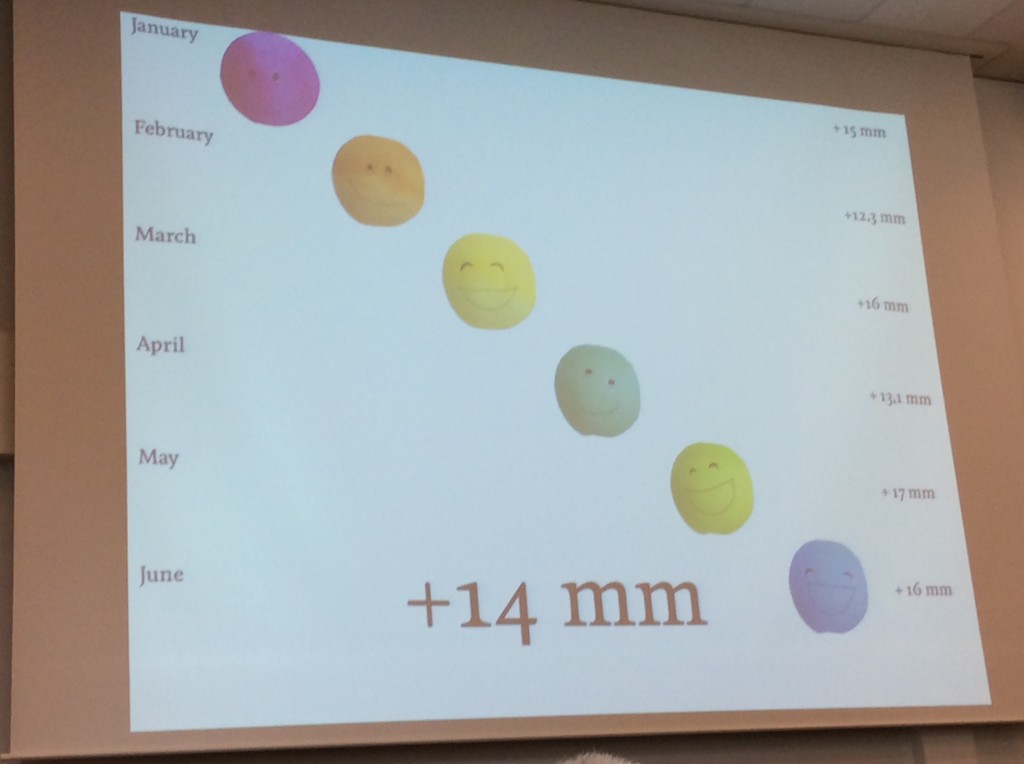

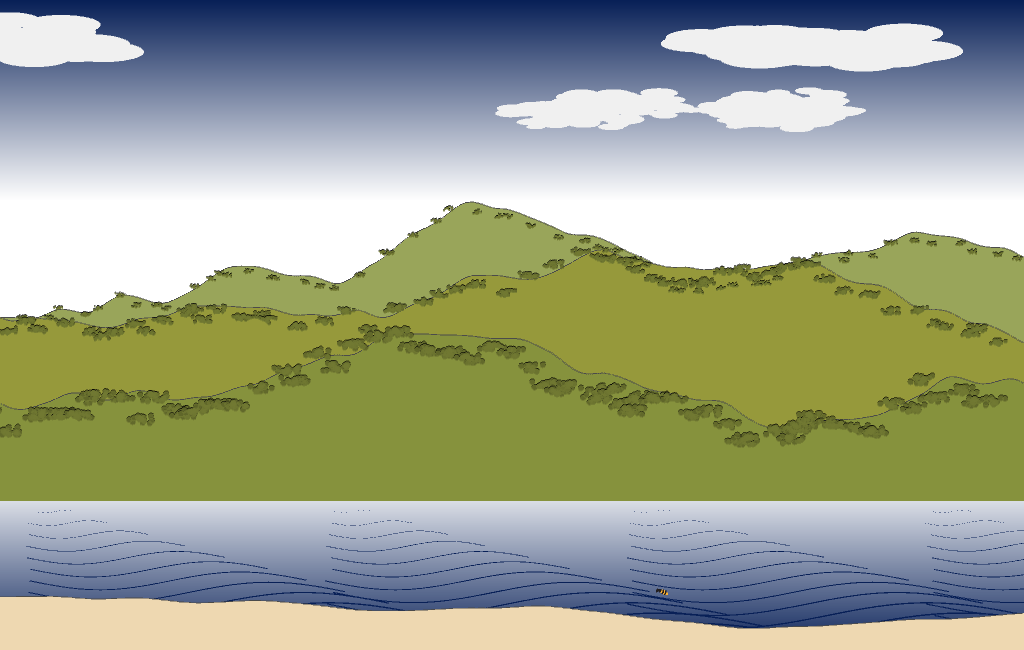

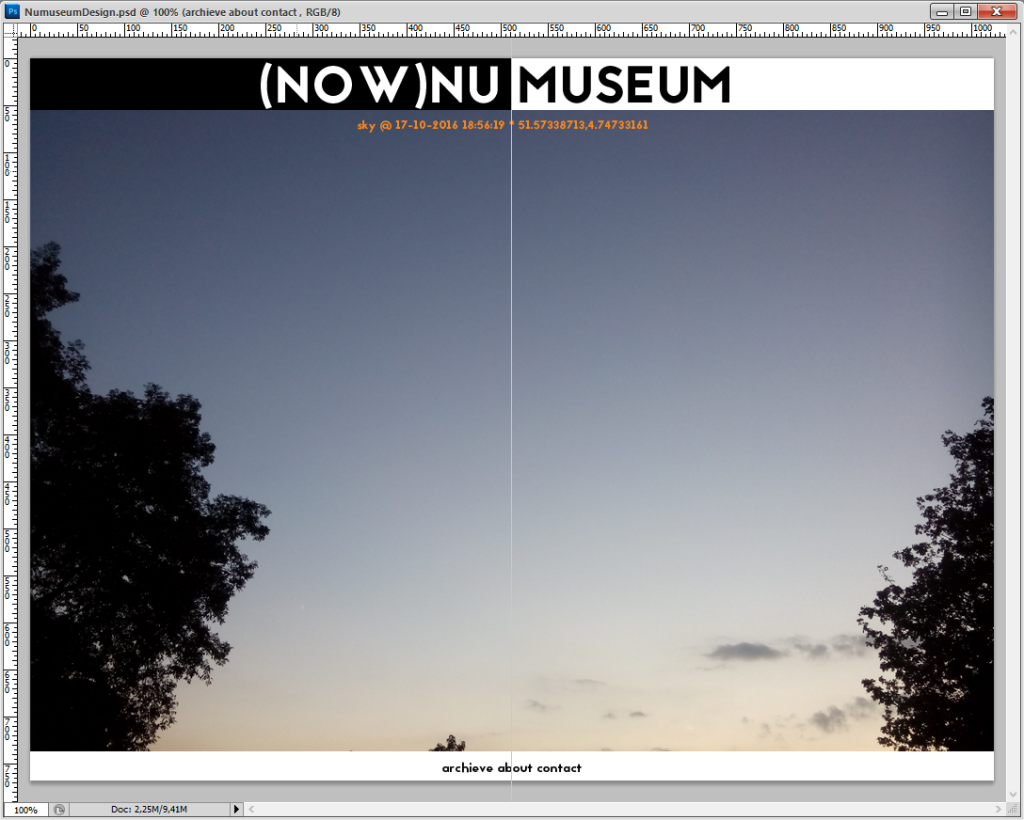

I want to create a now part (“nu” means now in Dutch) and a museum part. The now part always shows the most recent data. I’ll start off with a picture of the sky with time and location data, and overlay it with personal data like mood and heart rate. The museum part will show the history of the now part in some interactive way.
